An MCP Primer for Platform Partnerships Teams

Integration patterns we’ve seen before are about to repeat at unprecedented speed and scale.

If you’re managing platform partnerships, you’ve probably already heard about MCP (Model Context Protocol). You’ve also probably wondered what impact it might have on your strategy, partnerships, and day-to-day. So I thought it might be helpful to break down what MCP is, what it isn’t, and what it could mean for partnership leaders.

What MCP is

MCP is Anthropic’s relatively new open standard that lets AI assistants like Claude and ChatGPT connect to external sources of context.

Think of it as a standardized way for AI systems to “talk to” your existing data and artefacts without requiring custom integration work for every AI interface.

Instead of building separate integrations for Claude, ChatGPT, and every other AI assistant out there, platforms (or third-party developers) build MCP servers that work with all of them. It’s like a universal adapter - similar to USB - for AI platforms.

MCP servers typically expose three types of capabilities:

“Tools” - functions the AI can call (example: HubSpot’s “create_contact” or Linear’s “create_issue”)

“Resources” - data the AI can read (example: SQL databases, GitHub repository files)

“Prompts” - templates that help the AI interact effectively (example: “Generate a sales email using this contact's interaction history”)

“Tools” are usually the most used capability. They generally call existing platform APIs to search, read, write, enrich and change data.
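For readers who want to see the three capability types side by side, here is a minimal sketch in plain Python. It is not the official MCP SDK: the `ToyMCPServer` class, the `crm://contacts` URI and the in-memory contact list are all hypothetical, and the result shapes are simplified. The method names `tools/call`, `resources/read` and `prompts/get` follow the protocol's JSON-RPC 2.0 message style.

```python
import json
from typing import Any, Callable, Dict, List


class ToyMCPServer:
    """Illustrative stand-in for an MCP server (hypothetical, not the real SDK)."""

    def __init__(self) -> None:
        self.tools: Dict[str, Callable[..., Any]] = {}      # functions the AI can call
        self.resources: Dict[str, Callable[[], str]] = {}   # data the AI can read
        self.prompts: Dict[str, str] = {}                   # reusable templates

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        """Dispatch one JSON-RPC-style request to the matching capability."""
        method = request["method"]
        params = request.get("params", {})
        if method == "tools/call":
            result = self.tools[params["name"]](**params.get("arguments", {}))
        elif method == "resources/read":
            result = self.resources[params["uri"]]()
        elif method == "prompts/get":
            template = self.prompts[params["name"]]
            result = template.format(**params.get("arguments", {}))
        else:
            # -32601 is the JSON-RPC 2.0 code for "Method not found".
            return {"jsonrpc": "2.0", "id": request.get("id"),
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}


# Hypothetical in-memory "CRM" standing in for a real platform API.
contacts: List[Dict[str, Any]] = []


def create_contact(email: str, name: str) -> Dict[str, Any]:
    contact = {"id": len(contacts) + 1, "email": email, "name": name}
    contacts.append(contact)
    return contact


server = ToyMCPServer()
server.tools["create_contact"] = create_contact
server.resources["crm://contacts"] = lambda: json.dumps(contacts)
server.prompts["sales_email"] = (
    "Generate a sales email for {name} using this contact's interaction history."
)

# An AI assistant would send a request like this after choosing a tool.
response = server.handle({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "create_contact",
               "arguments": {"email": "ada@example.com", "name": "Ada"}},
})
print(response["result"])
```

The point of the sketch is the shape, not the detail: one server, three kinds of capability, and a single request format that any compatible assistant can send.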

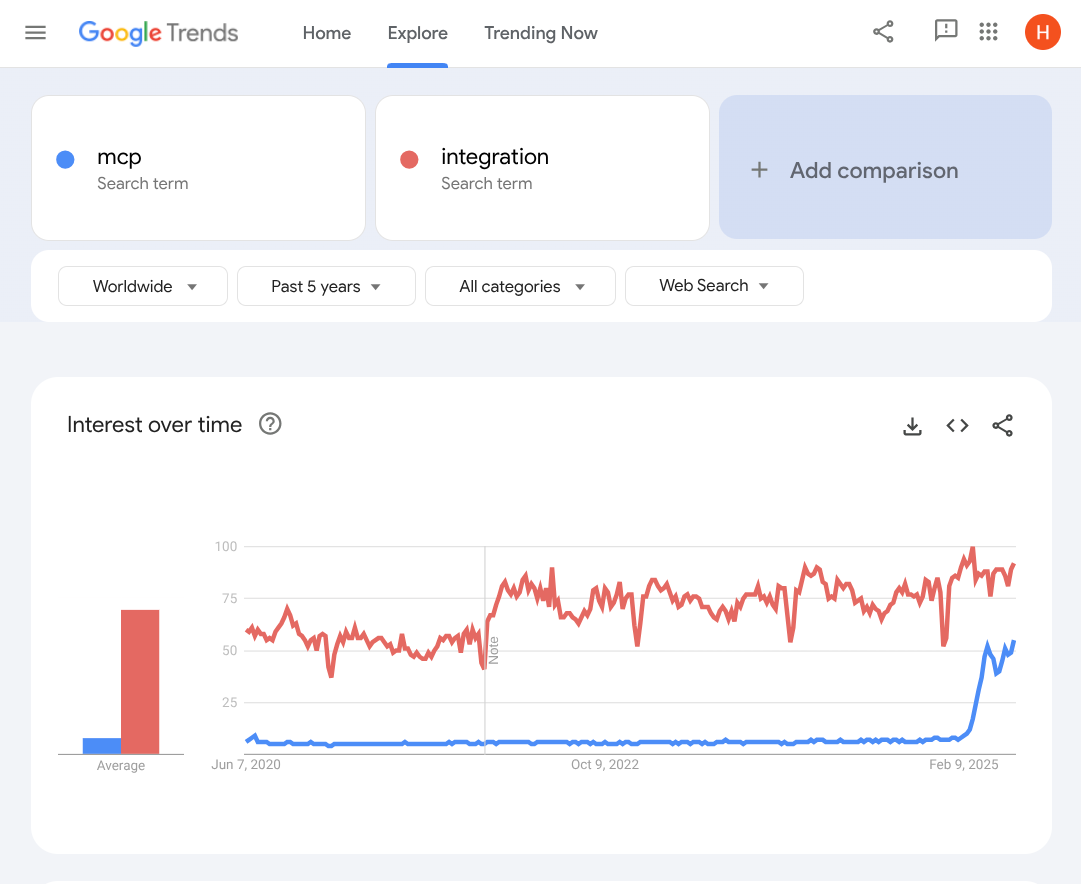

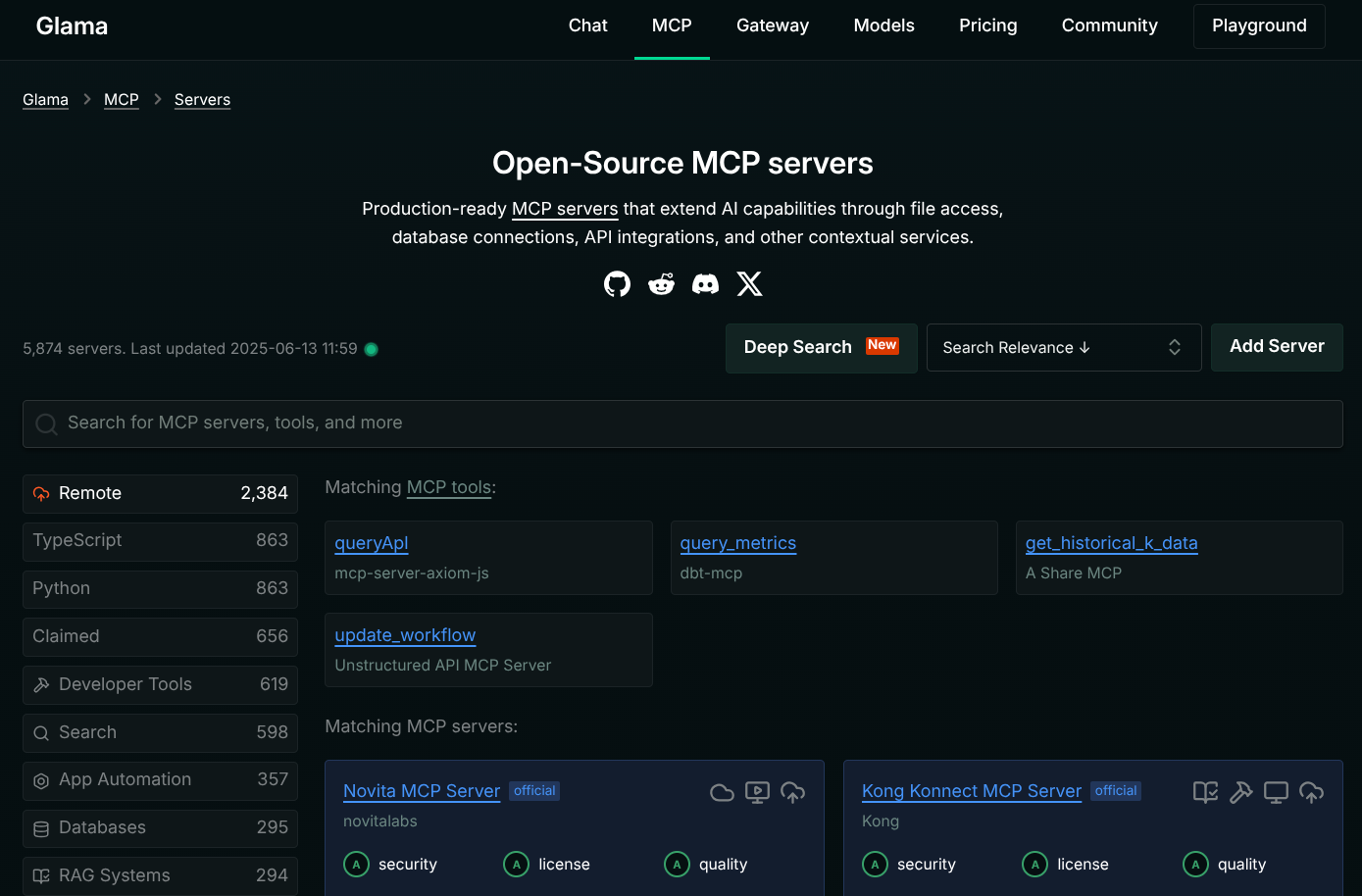

Adoption of MCP is growing fast. The protocol launched in November 2024, and directories like Glama, MCP.so, and Composio already list thousands of MCP servers.

Major platforms like GitHub and HubSpot recently released their own MCP servers.

Even OpenAI - an Anthropic competitor - adopted MCP across its products, in part because the broader ecosystem benefits of a standardized protocol outweighed the competitive risks of adopting a rival’s standard.

What MCP isn't

MCP isn’t a magic solution to a lack of API coverage or extensibility. It is - for now at least - essentially a standardized wrapper around existing platform APIs. If your platform doesn’t expose data or actions you need via API, MCP might not be able to access them in a structured, reliable way either.

“Tools” for searching and browsing websites are available through MCP, but this kind of unstructured data is often slower to access, and its availability is harder to predict.

So MCP won't solve your API coverage gaps, but it will make them more visible to customers expecting strong integrations with AI products and features. As more customers rely on AI assistants as their primary interface, they’ll naturally demand the ability to interact with more data in more systems.
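The coverage-gap point above can be sketched in a few lines of plain Python. Everything here is hypothetical: `FakePlatformAPI` stands in for a platform's existing API client, and `build_tools` is an illustrative helper, not part of any MCP library. The stub exposes contacts but has no invoice endpoint, so no invoice tool can be built on top of it.

```python
from typing import Any, Callable, Dict


class FakePlatformAPI:
    """Hypothetical API client: it exposes contacts but has no invoice endpoint."""

    def __init__(self) -> None:
        self._contacts = {"c1": {"name": "Ada", "email": "ada@example.com"}}

    def get_contact(self, contact_id: str) -> Dict[str, str]:
        return self._contacts[contact_id]


def build_tools(api: Any) -> Dict[str, Callable[..., Any]]:
    """Register a tool only for operations the underlying API actually supports."""
    wanted = {"get_contact": "get_contact", "get_invoice": "get_invoice"}
    tools: Dict[str, Callable[..., Any]] = {}
    for tool_name, api_method in wanted.items():
        fn = getattr(api, api_method, None)
        if callable(fn):
            tools[tool_name] = fn  # the tool is a thin wrapper over the API call
    return tools


tools = build_tools(FakePlatformAPI())
print(sorted(tools))  # ['get_contact'] - the invoice gap in the API is now a visible gap in the tools
```

A customer asking an AI assistant about invoices would hit that gap straight away, which is how MCP makes missing API coverage more visible.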

MCP also isn’t a replacement for existing integrations and partners. If anything, it augments them and opens them up to more use cases. It's like a new distribution channel that works alongside your existing partner ecosystem, not instead of it.

The ISV opportunity

For ISVs and SaaS platforms, MCP offers new ways to make their products “AI-native” quickly. A project management tool integrated through an MCP server suddenly becomes accessible to every MCP-compatible AI assistant, potentially reaching millions of new users.

HubSpot recently launched the first CRM deep research connector with ChatGPT, enabling sales teams to analyze customer data and identify new opportunities using natural language within ChatGPT. Linear created MCP servers so AI assistants can create tickets, update project status, and manage development workflows directly.

The near-term opportunity is the creation of additional utility value for customers, which in turn creates incremental distribution value for ISVs and platforms. Getting your product embedded where users increasingly spend their time is a win for everyone.

The MCP advantage for AI platforms

There are obvious benefits for AI platforms like Anthropic and OpenAI, too. MCP solves a critical limitation - AI assistants perform best when they can work with real business data and take real business actions.

Just in the past few months, MCP servers have been launched by:

CRMs (like Salesforce and HubSpot), enabling AI assistants to interact with or create customer data and automation flows

E-commerce platforms (like Shopify and BigCommerce), enabling AI assistants to automate inventory and order management

HRIS platforms (like Workday and BambooHR), enabling AI assistants to interact with and analyze employee data

By connecting to enterprise systems of record, AI assistants start to generate tangible productivity gains quickly. When Claude can create Salesforce opportunities, update HubSpot contacts, or process Shopify orders through MCP connections, it transforms these interfaces from chatbots into business automation platforms in their own right.

The integration acceleration effect

Here’s where MCP gets interesting for partnerships teams: it can accelerate the development of AI capabilities across partner products - and your own.

Historically, new integration development followed a predictable pattern. Customers request integrations, partners request APIs to build them, product teams add these requests to a backlog, and - often months or quarters later - the integration exists.

MCP changes this dynamic because fast AI platform adoption creates immediate market pressure.

When every user of every AI assistant expects the products and platforms they already use to integrate with them, API development - for AI use cases - gets prioritized. When competitors quickly launch comprehensive MCP servers, internal debates about prioritizing API coverage and extensibility get resolved quickly.

We’re seeing this play out in real time.

Platforms that historically had limited extensibility and API coverage are expanding their programmatic surfaces quickly - specifically to support AI integration use cases.

What this could mean for platform partnerships

If you’re managing partnerships for B2B software products and platforms, MCP creates opportunities and requirements.

The opportunities are twofold: creating utility value for customers, and creating new distribution value, through AI assistants and platforms.

The requirements are the platform and product changes needed to keep your products relevant as AI assistants become a primary interface in the world of work.

MCP adoption timelines are compressed compared to previous platform waves. Companies leaning into AI assistant integrations - through MCP - will enjoy significant ecosystem advantages as AI assistant usage grows.

Integration patterns we’ve seen before - where fully integrated customers retain better, and expand faster - are about to repeat at unprecedented speed and scale.

If you enjoyed reading this post - or would like to ask me a question - comment below or reach out to me on LinkedIn.