How To Build Your First Partnerships Agent (Step By Step)

Building simple AI agents is easy - and it’s getting easier by the day.

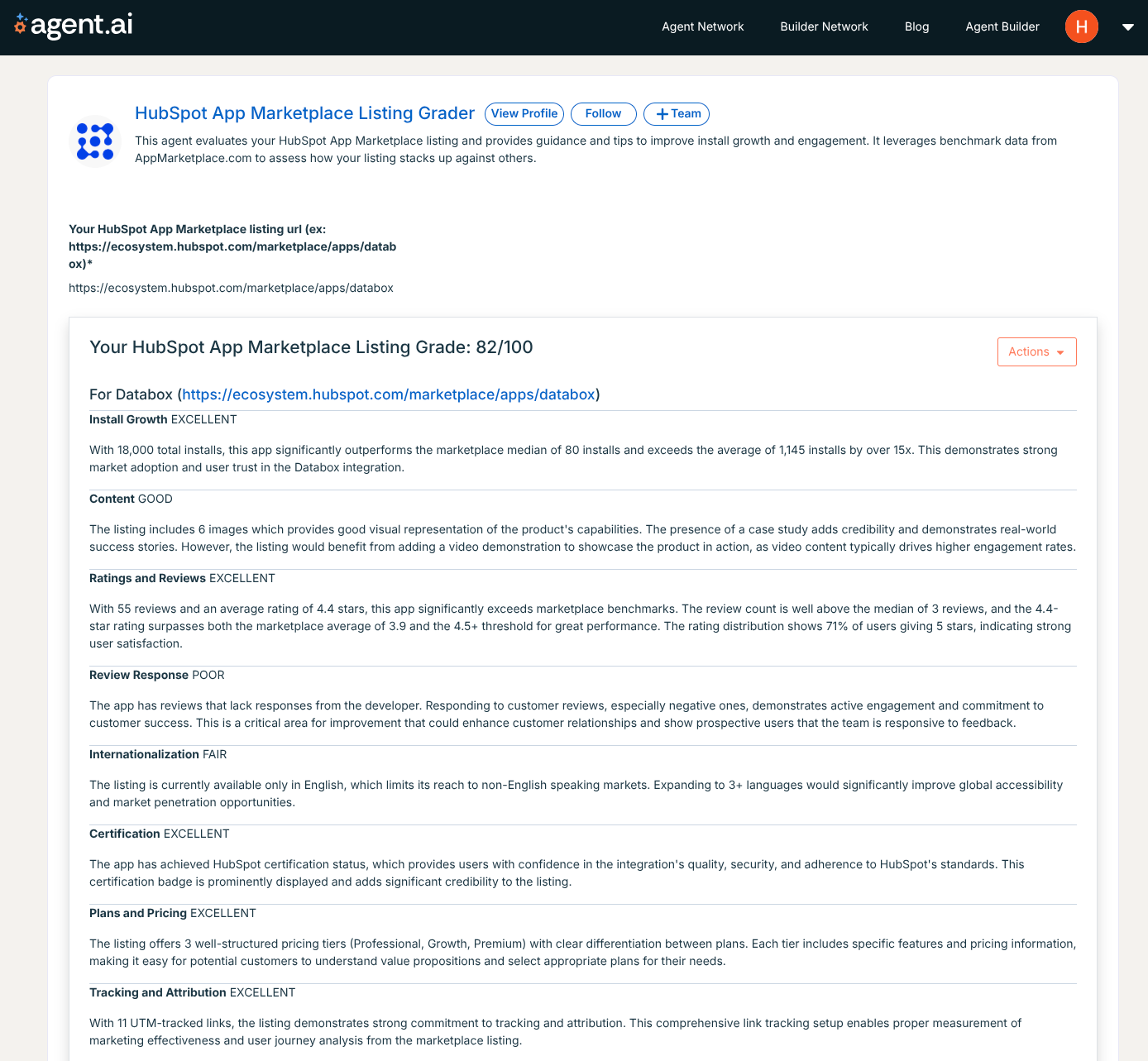

Earlier this year I quickly created and launched something I wish I’d had when building HubSpot’s App Partner Program: a self-serve tool for partners to evaluate the quality of their listings in the HubSpot App Marketplace.

HubSpot App Marketplace Listing Grader has completed over 10,500 runs with a 4.4/5 rating from 1,130+ reviews. It’s simple, but it works. The best bit? I built it in the same amount of time it took to drink a Venti Americano at Starbucks - it really was that easy.

What we’re actually building

HubSpot App Marketplace Listing Grader is built on Dharmesh’s Agent.ai platform, but it should be just as easy to build with other agent builders like Zapier or n8n. Much of this could also be built as an OpenAI GPT or a Claude-powered app.

And, before we dive in, let’s be clear: I’m calling this an “agent” but it’s more like a workflow that calls multiple LLMs. It’s not super agentic by Anthropic’s well-defined standards, but it’s a solid foundation for becoming properly agentic in future.

Here’s how I built this agent, and how to approach building yours.

Step 1: Define your problem (and keep it simple)

When building AI agents, the most important step happens before you touch any code or agent builder. First, you need to clearly articulate the problem you’re solving and resist the urge to build something overly complex.

In this case, the problem is straightforward: HubSpot’s 1,200+ App Partners lack 1:1 guidance on listing their apps effectively in the marketplace. They build great apps and integrations but create sub-par listings that don’t convert into usage or revenue.

The solution needed to be equally straightforward: a simple grader that takes their HubSpot App Marketplace listing URL, crawls it, evaluates it against best practices and benchmarks, and returns actionable recommendations for partners.

It’s a holistic evaluation that includes content quality, review responsiveness, certification status, plans and pricing completeness, and tracking/attribution setup.

Step 2: Map your inputs and outputs

Once the problem definition is clear, think through the user journey and technical flow. You’ll need to identify the context the agent requires, the LLMs you’ll use to analyse and interpret that context, and the agent’s output format.

It’s important to provide relevant, high-quality context to agents and LLMs so they answer or act in helpful, factually correct, coherent and complete ways.

For the HubSpot App Marketplace Listing Grader, the input is super simple: a HubSpot App Marketplace listing URL. That’s it. No complex forms, no multi-step wizards, no prompts. Paste a URL, hit submit, and get recommendations.

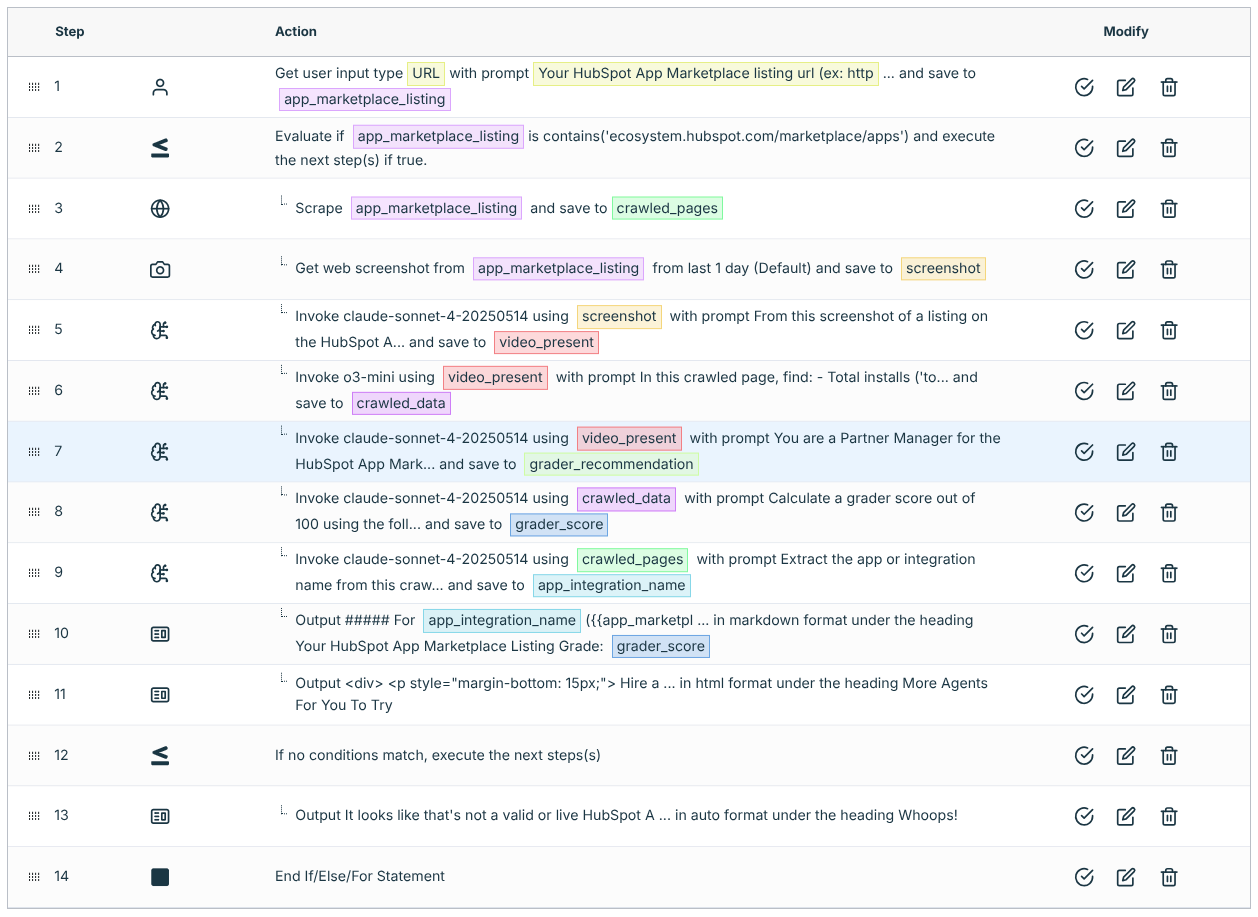

The routing logic is basic - checking first if the URL actually resolves to a valid listing. You could get much fancier here later with conditional flows based on app category, certification status, etc, but starting simply helps validate the core concept first.
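If you’re building outside an agent builder, a cheap structural check can run before the crawl. Here’s a minimal sketch in Python. The host and path prefix are assumptions about what a listing URL looks like, and the function only checks the URL’s shape; confirming that it actually resolves to a live listing would still need a request to the page.

```python
from urllib.parse import urlparse

# Assumptions for illustration: listing URLs live on this host, under this path.
ASSUMED_HOST = "ecosystem.hubspot.com"
ASSUMED_PATH_PREFIX = "/marketplace/apps/"


def looks_like_listing_url(url: str) -> bool:
    """Return True if the URL has the shape of a marketplace listing URL."""
    parsed = urlparse(url.strip())
    if parsed.scheme != "https" or parsed.netloc.lower() != ASSUMED_HOST:
        return False
    if not parsed.path.startswith(ASSUMED_PATH_PREFIX):
        return False
    # Require something after the prefix, i.e. an app slug.
    slug = parsed.path[len(ASSUMED_PATH_PREFIX):].strip("/")
    return slug != ""
```

Rejecting malformed input here means the crawl and the LLM calls only run on URLs that have a chance of being real listings.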

For triggers, I chose manual submissions through Agent.ai’s interface, but most agent builders support webhooks, scheduled tasks, etc. It’s usually best to lay foundations to support multiple trigger types without over-engineering the initial version.

The output of the agent is a graded analysis with specific, actionable recommendations. Future versions of the agent could start to act on these recommendations for you - like drafting review responses, drafting new content, etc.

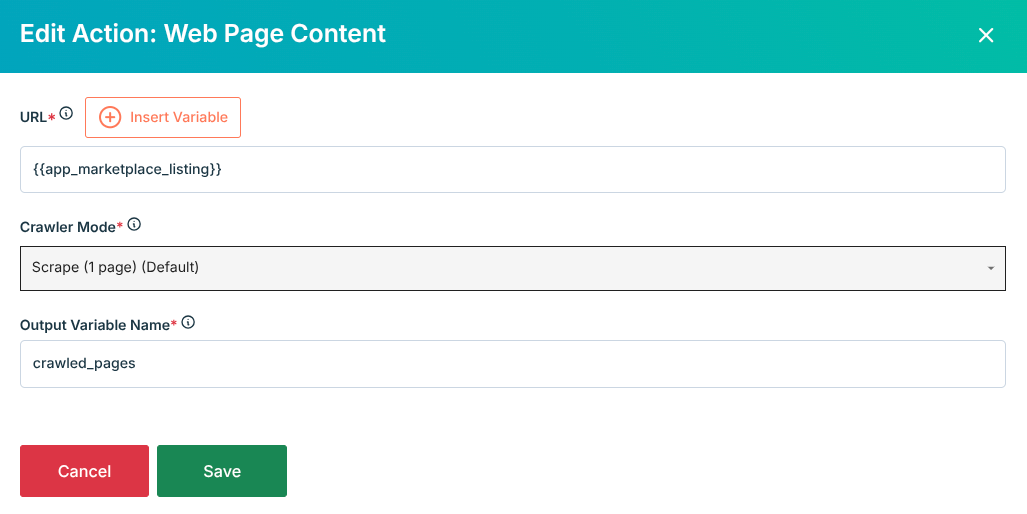

Step 3: Grab and prep your data

This is where the technical setup really starts. In this case we’re grabbing data from the web (by crawling the HubSpot App Marketplace listing) but - depending on the agent you’re building - you might want to connect to other data sources too.

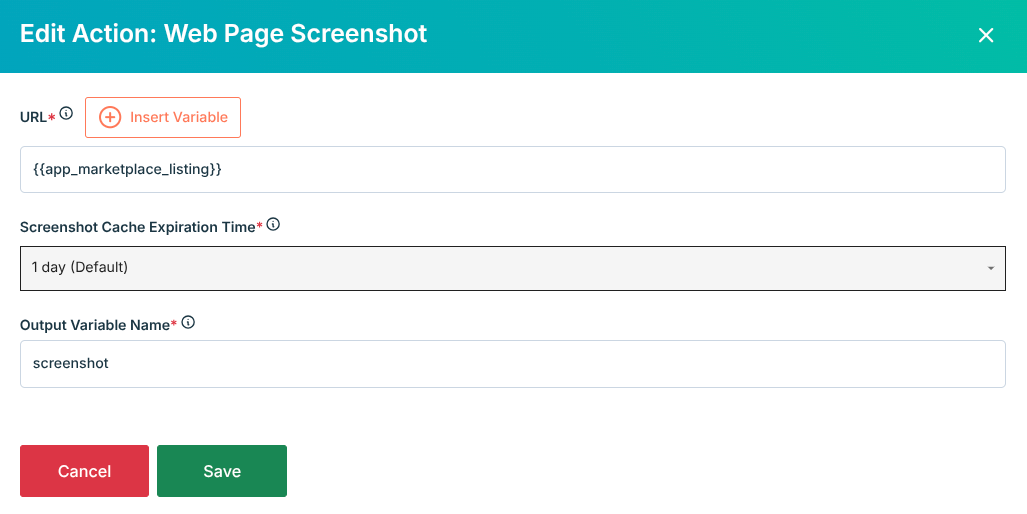

Crawling the HTML of the URL extracts the raw text content, meta tags, structured data, and basic page elements. But HTML parsing alone isn’t always enough - pairing it with a visual screenshot of the listing provides a more complete view.

That’s why the agent takes a full-page screenshot of every URL. The screenshot captures what users actually see when they visit the listing, including visual hierarchy, broken images, and multimedia content that might not be evident from HTML alone.

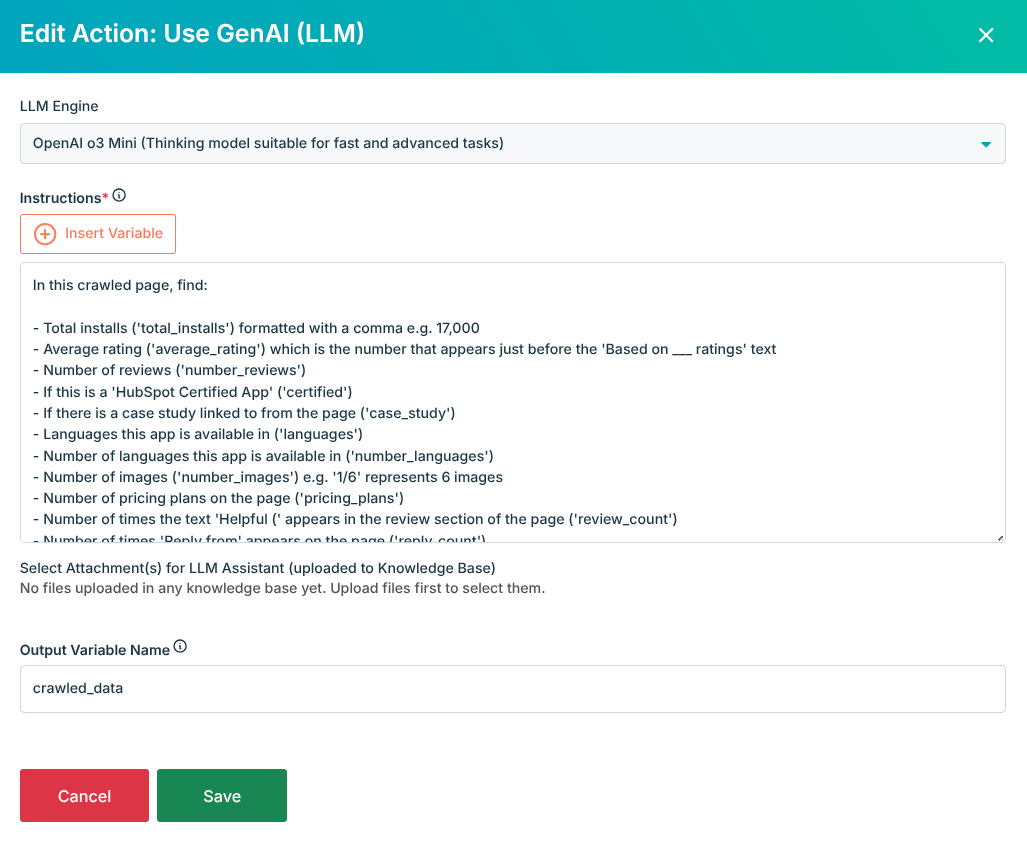

For the initial data extraction, I used OpenAI o3-mini - it’s fast and cheap for simple tasks like extracting structured content from unstructured blobs. This is the prompt:

In this crawled page, find:

- Total installs ('total_installs') formatted with a comma e.g. 17,000

- Average rating ('average_rating') which is the number that appears just before the 'Based on ___ ratings' text

- Number of reviews ('number_reviews')

- If this is a 'HubSpot Certified App' ('certified')

- If there is a case study linked to from the page ('case_study')

- Languages this app is available in ('languages')

- Number of languages this app is available in ('number_languages')

- Number of images ('number_images') e.g. '1/6' represents 6 images

- Number of pricing plans on the page ('pricing_plans')

- Number of times the text 'Helpful (' appears in the review section of the page ('review_count')

- Number of times 'Reply from' appears on the page ('reply_count')

- Number of links to the partners' website with UTM tracking ('utm_count')

Prepend the relevant field name (e.g. total_installs). Do not append or prepend any other text.

Binary choices like certified and case_study should be "True" or "False."

If average_rating is not available return 0.

{{video_present}}

{{crawled_pages}}

The simple prompt instructs the LLM to extract specific elements like total installs, average rating, certification status, number of images, number of pricing plans, and whether or not reviews have been responded to.

The more structured the data, the better the analysis and recommendations will be.
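The extraction prompt asks for each value to be prefixed with its field name, so the reply can be turned into typed data before it reaches the next step. This is a rough sketch, assuming the model replies with one `field_name: value` pair per line (the exact separator isn’t specified in the prompt, so treat that as an assumption). The field names come from the prompt; `parse_extraction` is a hypothetical helper.

```python
import re

# Assumed reply shape: "field_name: value", optionally with a leading "- ".
LINE = re.compile(r"^\s*-?\s*(\w+)\s*:\s*(.+?)\s*$")

INT_FIELDS = {"total_installs", "number_reviews", "number_languages",
              "pricing_plans", "review_count", "reply_count", "utm_count"}
BOOL_FIELDS = {"certified", "case_study", "video_present"}


def _to_int(raw):
    """Convert '17,000' to 17000; return None if it is not a whole number."""
    digits = raw.replace(",", "").strip()
    return int(digits) if digits.isdigit() else None


def parse_extraction(reply):
    """Parse the extraction reply into a dict of typed values."""
    data = {}
    for line in reply.splitlines():
        match = LINE.match(line)
        if match is None:
            continue  # skip blank lines and anything unexpected
        name, raw = match.group(1), match.group(2)
        if name in BOOL_FIELDS:
            data[name] = raw.strip(" \"'.").lower() == "true"
        elif name in INT_FIELDS:
            data[name] = _to_int(raw)
        elif name == "number_images":
            # Per the prompt, '1/6' represents 6 images; a bare '6' also works.
            data[name] = _to_int(raw.split("/")[-1])
        elif name == "average_rating":
            try:
                data[name] = float(raw)
            except ValueError:
                data[name] = None
        else:
            data[name] = raw  # e.g. 'languages' stays as text
    return data
```

Values that fail to parse come back as `None` rather than raising, which makes it easy to spot a field the model missed.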

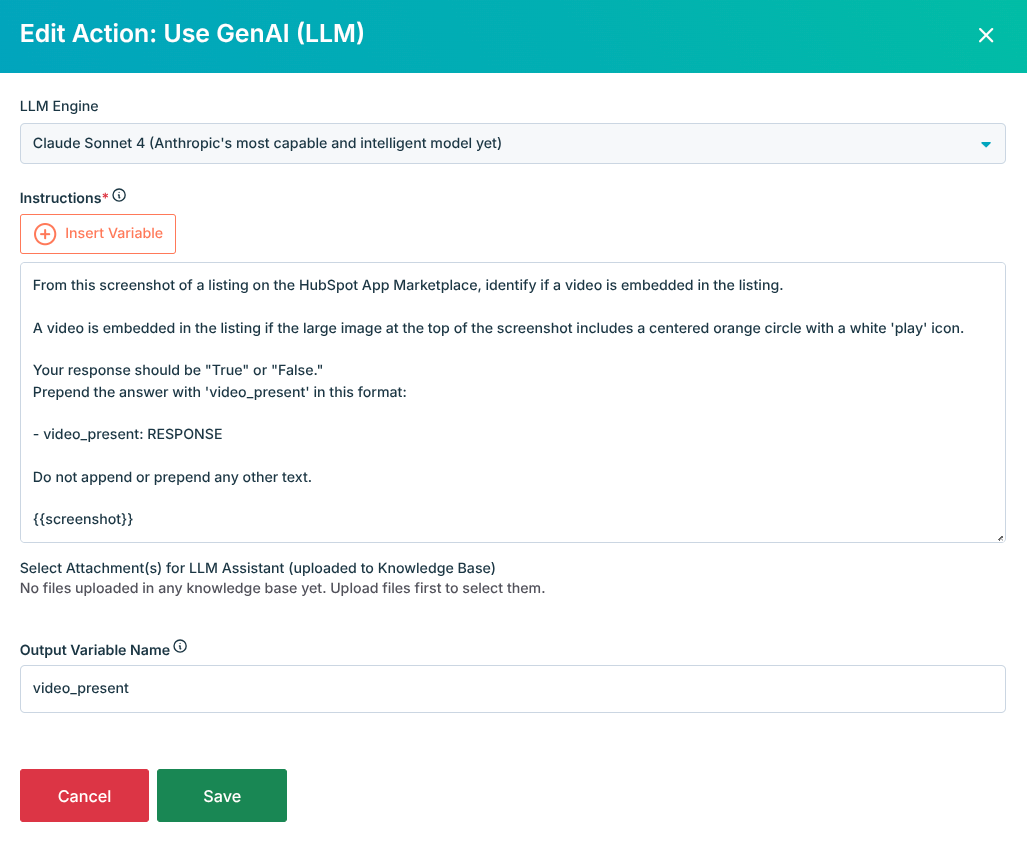

Next, I used Claude Sonnet 4’s multimodal capabilities to analyze and extract structured content from the screenshot. This is the prompt:

From this screenshot of a listing on the HubSpot App Marketplace, identify if a video is embedded in the listing.

A video is embedded in the listing if the large image at the top of the screenshot includes a centered orange circle with a white 'play' icon.

Your response should be "True" or "False."

Prepend the answer with 'video_present' in this format:

- video_present: RESPONSE

Do not append or prepend any other text.

{{screenshot}}

Specifically, it identifies whether or not a video is embedded in the listing - a signal that’s harder to extract from the raw HTML.
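The double-brace placeholders in these prompts are how one step’s output feeds the next: the `video_present` line from the screenshot prompt is dropped into the extraction prompt. Agent.ai handles this substitution for you. If you’re wiring it up yourself, a minimal sketch might look like the following, where `render_prompt` is a hypothetical helper and not part of any platform’s API.

```python
import re

# Matches {{name}} placeholders, allowing optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template, values):
    """Replace each {{name}} in the template with values[name]."""
    def substitute(match):
        name = match.group(1)
        if name not in values:
            # Fail loudly rather than send a prompt with a hole in it.
            raise KeyError(f"no value supplied for placeholder {name!r}")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)
```

Raising on a missing value is a deliberate choice here: a prompt that silently ships with an unfilled placeholder tends to produce confident but useless output.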

Step 4: Generate analysis and recommendations

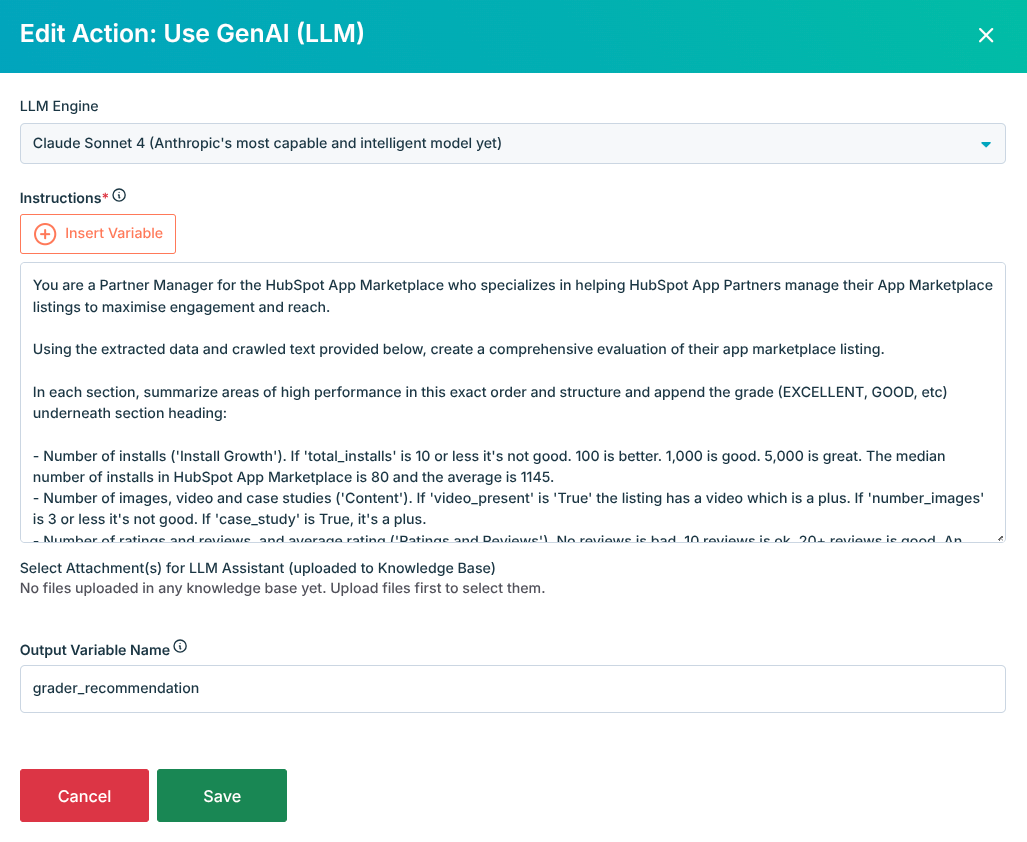

This is where the real value gets created. I use Claude Sonnet 4 to generate analysis and recommendations - it’s fantastic at recognising patterns in tabular/time series data, has a long-context window, and can toggle into deeper reasoning when required.

The analysis happens in three steps. They could run in parallel, but in this example they run in sequence.
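For anyone who does want to run the analysis calls in parallel, here’s a minimal sketch using Python’s `asyncio.gather`. The `call_llm` function is a hypothetical stand-in that just sleeps briefly; you’d swap in your model provider’s async client. The step names are illustrative.

```python
import asyncio


async def call_llm(step_name: str, prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    await asyncio.sleep(0.01)  # simulates network latency
    return f"[{step_name}] response to {len(prompt)} chars of prompt"


async def run_analysis(prompts: dict[str, str]) -> dict[str, str]:
    """Run every analysis prompt concurrently and return results by step name."""
    names = list(prompts)
    # gather() returns results in the same order the awaitables were passed in.
    results = await asyncio.gather(*(call_llm(n, prompts[n]) for n in names))
    return dict(zip(names, results))
```

Calling `asyncio.run(run_analysis({"evaluation": "...", "score": "..."}))` would run both steps at once. This only works when the steps don’t depend on each other’s output.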

First, a comprehensive evaluation of listing content and performance data against marketplace best practices and benchmarks. The prompt includes specific criteria for install growth, content completeness, ratings and reviews, and more. Here it is:

You are a Partner Manager for the HubSpot App Marketplace who specializes in helping HubSpot App Partners manage their App Marketplace listings to maximise engagement and reach.

Using the extracted data and crawled text provided below, create a comprehensive evaluation of their app marketplace listing.

In each section, summarize areas of high performance in this exact order and structure and append the grade (EXCELLENT, GOOD, etc) underneath section heading:

- Number of installs ('Install Growth'). If 'total_installs' is 10 or less it's not good. 100 is better. 1,000 is good. 5,000 is great. The median number of installs in HubSpot App Marketplace is 80 and the average is 1145.

- Number of images, video and case studies ('Content'). If 'video_present' is 'True' the listing has a video which is a plus. If 'number_images' is 3 or less it's not good. If 'case_study' is True, it's a plus.

- Number of ratings and reviews, and average rating ('Ratings and Reviews'). No reviews is bad, 10 reviews is ok, 20+ reviews is good. An average rating of 4+ is good, 4.5+ is great. The median number of reviews in HubSpot App Marketplace is 3 and the average rating is 3.9.

- Response rate to most recent 10 reviews ('Review response'). If 'reply_count' is less than 'review_count', recommend that the user replies to reviews. Do NOT include the 'reply_count' and 'review_count' metrics in the response - just say they have reviews that lack a response. If 'reply_count' is the same or more than 'review_count' it's EXCELLENT.

- Languages the listing is available in ('Internationalization'). One language is standard, 3+ is good, 5+ is great.

- Certification status ('Certification'). If the 'certified' value is false, recommend the user submits their app for certification.

- Completeness of plans and pricing ('Plans and Pricing'). If the 'pricing_plans' value is 1 it's bad, 3 plans is good, 3+ is great.

- Link tracking setup ('Tracking and Attribution'). If 'utm_count' is 0 it's bad, 3 links is good, recommend all links are tracked.

Guidelines:

- Use specific numbers from the data throughout

- Keep recommendations ordered as above

- Focus on concrete, actionable insights

- Base recommendations on HubSpot App Marketplace listing guidelines

- Separate each result with a horizontal line for visual clarity

- Don't include an overall header in the response

- Keep all font sizes the same, bold header text only

- Format the output as markdown

This is the extracted data:

{{video_present}}

{{crawled_data}}

This is the crawled text:

{{crawled_pages}}

It also generates specific recommendations to improve listing quality. These aren’t generic suggestions - they outline steps to take, and why to take them. Each section and recommendation is graded from ‘POOR’ to ‘EXCELLENT’ - super simple.
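Criteria with hard thresholds can also be checked in plain code as a sanity check on what the LLM returns. Here’s a small sketch of the ‘Review response’ rule from the prompt above. The `EXCELLENT` label comes from the prompt; `NEEDS_REPLIES` is a made-up label for illustration, since the prompt doesn’t name a grade for that case.

```python
def review_response_grade(review_count: int, reply_count: int) -> str:
    """Apply the 'Review response' rule: replies >= reviews is EXCELLENT."""
    if review_count < 0 or reply_count < 0:
        raise ValueError("counts cannot be negative")
    if reply_count >= review_count:
        return "EXCELLENT"
    # Hypothetical label: the prompt only says to recommend replying.
    return "NEEDS_REPLIES"
```

Note that a listing with no visible reviews and no replies comes out as `EXCELLENT` under a literal reading of the rule, which is worth deciding on explicitly.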

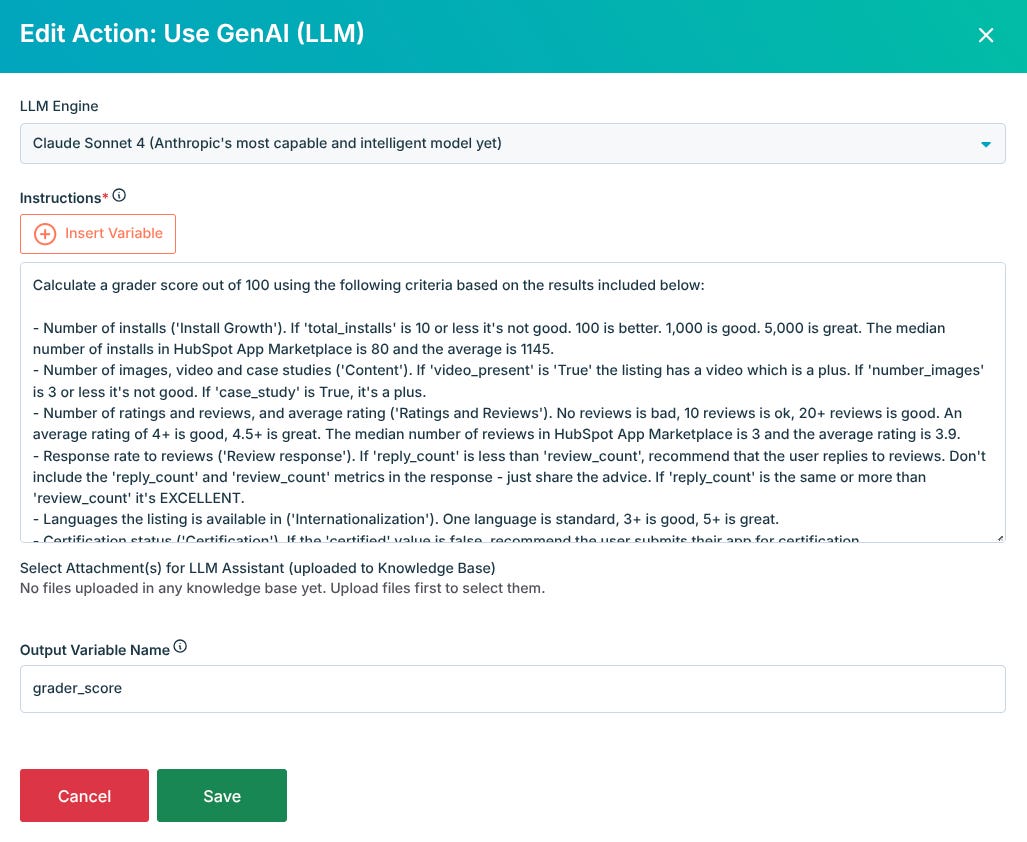

Second, a separate call to Claude Sonnet 4 calculates a numerical score out of 100. This might seem arbitrary, but partners love having a concrete number to track improvements. Scoring considers all the factors mentioned above. This is the prompt:

Calculate a grader score out of 100 using the following criteria based on the results included below:

- Number of installs ('Install Growth'). If 'total_installs' is 10 or less it's not good. 100 is better. 1,000 is good. 5,000 is great. The median number of installs in HubSpot App Marketplace is 80 and the average is 1145.

- Number of images, video and case studies ('Content'). If 'video_present' is 'True' the listing has a video which is a plus. If 'number_images' is 3 or less it's not good. If 'case_study' is True, it's a plus.

- Number of ratings and reviews, and average rating ('Ratings and Reviews'). No reviews is bad, 10 reviews is ok, 20+ reviews is good. An average rating of 4+ is good, 4.5+ is great. The median number of reviews in HubSpot App Marketplace is 3 and the average rating is 3.9.

- Response rate to reviews ('Review response'). If 'reply_count' is less than 'review_count', recommend that the user replies to reviews. Don't include the 'reply_count' and 'review_count' metrics in the response - just share the advice. If 'reply_count' is the same or more than 'review_count' it's EXCELLENT.

- Languages the listing is available in ('Internationalization'). One language is standard, 3+ is good, 5+ is great.

- Certification status ('Certification'). If the 'certified' value is false, recommend the user submits their app for certification.

- Completeness of plans and pricing ('Plans and Pricing'). If the 'pricing_plans' value is 1 it's bad, 3 plans is good, 3+ is great.

- Link tracking setup ('Tracking and Attribution'). If 'utm_count' is 0 it's bad, 3 links is good, recommend all links are tracked.

Guidelines:

- Do not append or prepend any text to the score. Return the score /100 only.

Results:

{{crawled_data}}

The key to good recommendations is being specific enough that a partner can take immediate action without needing additional research or interpretation.

As I mentioned earlier, future versions of the agent could invoke other agents to act on each recommendation.
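Because the scoring prompt asks for the score /100 and nothing else, it’s worth validating the reply before showing it to a partner. This is a sketch under the assumption that a well-behaved reply looks like `82/100` or just `82`; `parse_score` is a hypothetical helper.

```python
import re

# Accepts "82", "82/100" or "82 / 100"; anything else is rejected.
SCORE = re.compile(r"(\d{1,3})(?:\s*/\s*100)?")


def parse_score(reply: str) -> int:
    """Return the score as an int between 0 and 100, or raise ValueError."""
    match = SCORE.fullmatch(reply.strip())
    if match is None:
        raise ValueError(f"unexpected score format: {reply!r}")
    score = int(match.group(1))
    if not 0 <= score <= 100:
        raise ValueError(f"score out of range: {score}")
    return score
```

If the model adds extra text or returns an out-of-range number, the error gives you a clear point to retry the call instead of passing a bad score along.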

Step 5: Package and deliver results

The final step presents the analysis in a format that’s immediately useful and shareable. In this case, the output is simple - but future versions could include charts, screenshots of specific areas of the listing to improve, etc.

The entire process takes about 30 seconds from URL submission to final report - fast enough to feel instant but thorough enough to provide genuine value. The results are shareable through the Agent.ai interface, which has been crucial for adoption since partners can easily forward reports to colleagues and partner managers.

What makes this particularly effective is that partners get immediate feedback instead of waiting weeks for human review. They can iterate on their listings in real-time and rerun the analysis to track improvements.

This creates a feedback loop that drives actual behavior change rather than one-time recommendations that get forgotten.

Quick tips for writing better prompts

The prompts I’ve used are far from perfect, but they’re more than good enough. Here are some tips based on what I’ve learned building hundreds of LLM-powered workflows and AI agents.

Keep agents and tasks focused. Tell agents and LLMs your desired outcomes, and what you want to achieve. Set clear limits, including response length, format, etc.

Use LLMs to write better prompts. Seriously. Ask the specific Claude or ChatGPT model you’re using to help you craft prompts it will interpret and understand well.

Structure everything. Use roles, step-by-step thinking, and markdown/XML tags to organize, optimize and manage your prompts.

Set boundaries. Define constraints upfront - what the agent should and shouldn’t do. Some models perform better if they’re told what not to do (others don’t).

That’s it!

This simple agent has saved partner managers hours of manual app reviews and has given partners instant feedback instead of waiting weeks for human review.

Next time you’re manually reviewing partner submissions or answering the same partner questions repeatedly, think through how you can delegate these tasks to your own custom-built fleet of AI agents.

They’re super easy to build - and it’s getting easier by the day.

If you enjoyed reading this post - or would like to ask me a question - comment below or reach out to me on LinkedIn.