Scaling Context Engineering, Through Context Partnerships

How partnerships can capture a meaningful slice of a multi-trillion-dollar transformation.

In the early days of Facebook’s Ads API ecosystem, most partner-built products were essentially “wrappers” built upon our nascent Ads API.

At the time, our native ad buying interfaces were under-invested and not fit for purpose for large advertisers. Facebook offered a “power user” tool, Power Editor, which large advertisers used - but it came in the form of a brittle Chrome plugin.

It had half an engineer working on it, and you could tell. It crashed a lot.

Advertisers even had to “download” their ad accounts into their browser before they could use it - it was that basic. Advertisers often forgot to “upload” changes back to Facebook before they closed their browser. Many, many tears were shed as a result.

So, early Ads API partners pursued the lowest-hanging fruit - everything Facebook should have built, but hadn’t yet. Tools to upload bulk creatives, quickly duplicate ads and campaigns, and access insights to improve budget allocation and targeting.

These Ads API “wrappers” added value. Something was better than nothing.

But Facebook was a newly listed public company reliant on advertising revenue, so that reality wouldn’t last long. Our product and engineering teams were already planning better ad products, and better “built by Facebook” interfaces to buy them through.

We shared this new reality with our partners. Many listened, but many didn’t.

The partners who didn’t listen became builders and sellers of similar - commoditized - ad buying interfaces. And each of them was now competing with another similar - and free to use - ad buying interface offered by Facebook. It was a race to the bottom.

Many of these partners, unfortunately, didn’t survive.

Those that did listen became some of our most successful Ads API partners. Over the next few years companies like Salesforce, Smartly, and StitcherAds “touched” tens of billions of dollars of Facebook ads revenue, became hugely profitable businesses, and generated multi-million dollar outcomes for their employees and investors.

The difference between partners who listened, and those that didn’t? Context.

The context gap

The partners who listened survived and thrived because they looked beyond the walls of Facebook’s own Ads API to create utility value for customers. Salesforce integrated their Social AdTech product with their CRM, enabling advertisers to reach existing customers - and find new ones - on Facebook, through custom audiences.

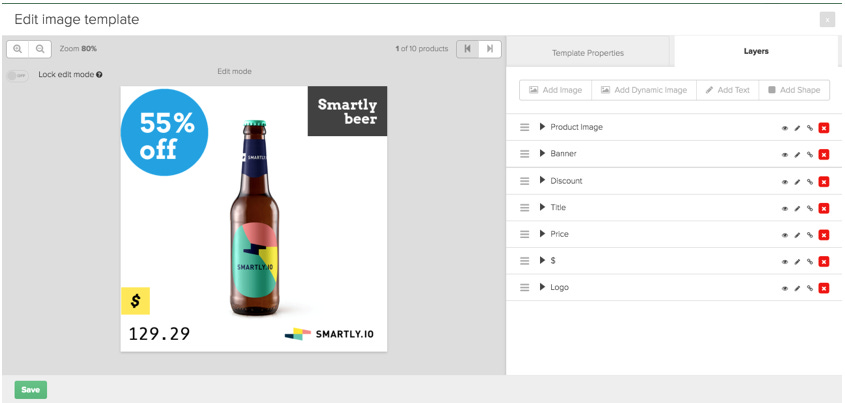

Partners like Smartly and StitcherAds integrated feeds from ecommerce platforms, turning static product images into dynamic video ads. They helped merchants use offline data to target campaigns effectively online. They offered server-to-server integrations to feed signals to Facebook’s auction engine, improving performance.

They turned latent data into meaningful context, which produced much better results.

If context was important to Facebook’s advertisers then, it’s even more important today to drive adoption of AI in every function in every business. AI can positively impact every business, if it’s implemented and integrated in the right ways.

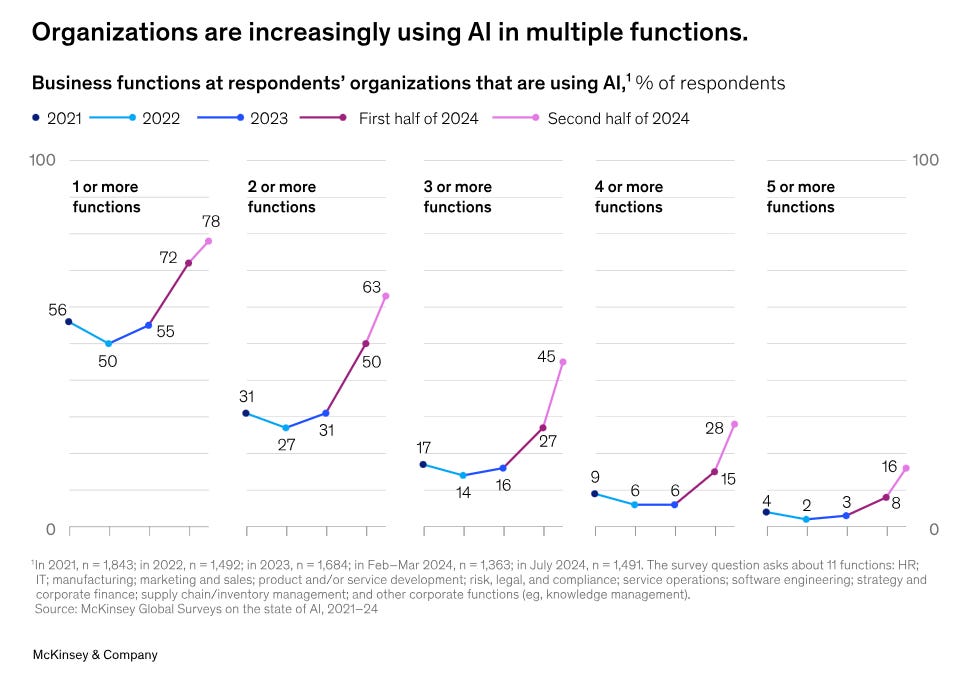

78% of respondents to a recent McKinsey survey said their organizations use AI in at least one business function, up from 55% a year before. That’s impressive - but one business function is one of many. AI has a long way to go before it’s as ubiquitous as email.

Depending on the organization - and functions within it - we spend much of our day creating and consuming content across multiple modalities. We create and share presentations, charts, and dashboards. We snap photos of receipts and hunt down PDFs to file expense reports. We collaborate in folders, repos, and Slack channels.

Yet, most people still use AI assistants like ChatGPT, Claude, and Microsoft's Copilot for simple research tasks. And most output from these tasks is still in text form.

To scale adoption across multiple organizational functions and move beyond simple text outputs, we need to provide LLMs with the right tools and knowledge to understand and create content across modalities. Building simple "wrappers" around LLMs isn't enough anymore. To move beyond text, LLMs need context.

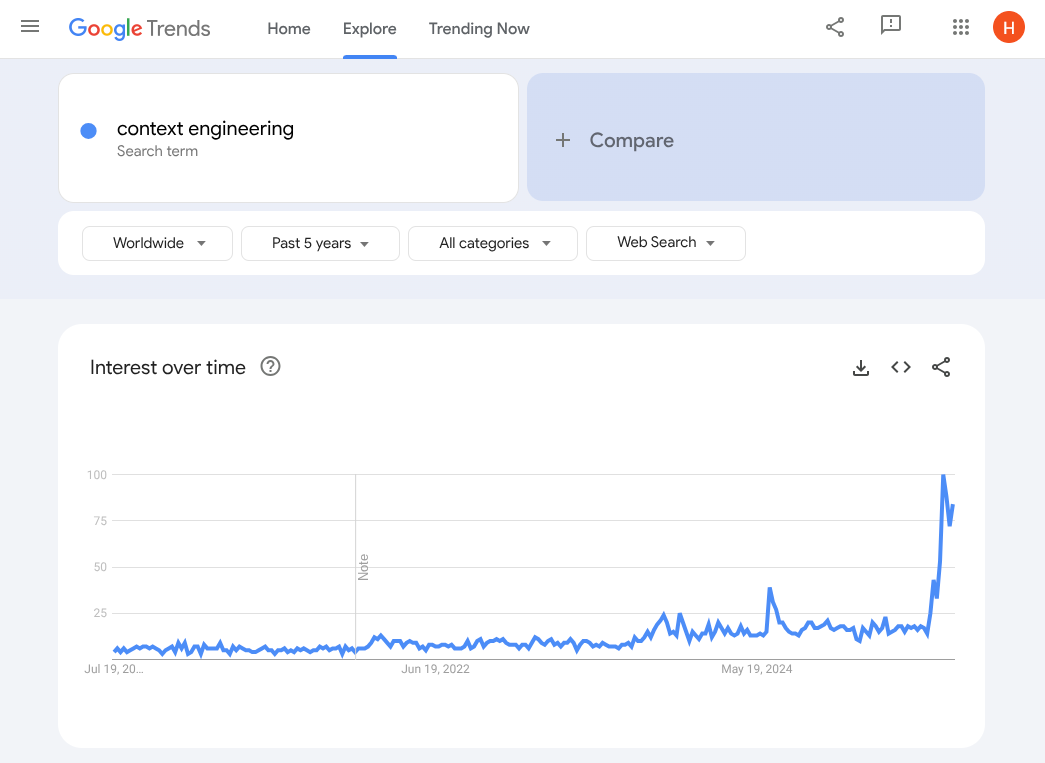

Hence the emerging demand for “context engineering.”

From prompt engineering, to context engineering

Most of our early interactions with LLMs - or AI assistants built upon them - happened through prompts. Better prompts, better results. The process of refining prompts to extract high-quality responses from LLMs was quickly dubbed "prompt engineering."

But great prompts are never enough. LLMs don’t know everything about your business.

When you give LLMs access to relevant context, you go much further, much faster.

Enter context engineering, an emerging discipline of providing AI systems with precisely the right information, tools, and data - at the right time.

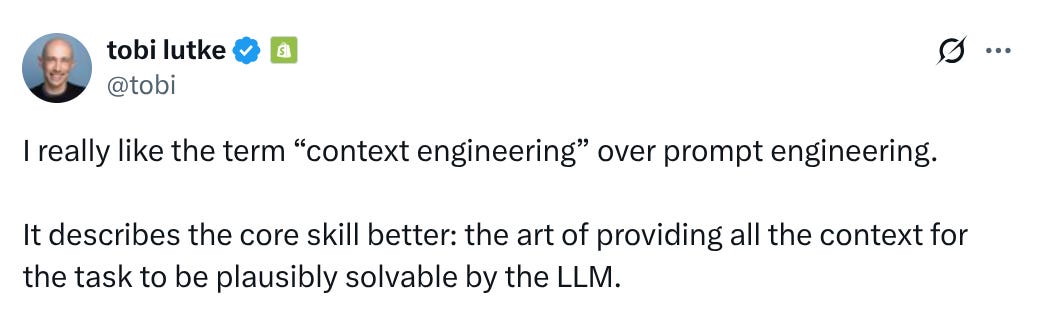

Tobi Lutke, CEO of Shopify, recently captured the importance of context engineering:

Andrej Karpathy, co-founder of OpenAI, built on Tobi’s tweet, giving concrete examples of what an "LLM app" needs to do to create high-quality outcomes:

“On top of context engineering itself, an LLM app has to:

- break up problems just right into control flows

- pack the context windows just right

- dispatch calls to LLMs of the right kind and capability

- handle generation-verification UIUX flows

- a lot more - guardrails, security, evals, parallelism, prefetching, ...”

Karpathy describes LLMs as functioning like a new operating system where the model serves as the CPU, and context windows act as RAM - but with finite capacity.

Context engineering is a delicate art of curating what fits into this "working memory."

Too much context overwhelms the system. Too little context produces generic, unhelpful responses. The wrong context at the wrong time leads to hallucinations or irrelevant outputs. To get the best outputs, you need to give LLMs the best inputs.

Is it any wonder, then, that Forward Deployed Engineers are emerging as an in-demand role in AI platform companies? They’re integration specialists - context engineers - who also understand how to bridge gaps between enterprise data and LLMs.

Context engineering, at enterprise scale

Andreessen Horowitz captured the importance of Forward Deployed Engineers in “Trading Margin for Moat: Why the Forward Deployed Engineer Is the Hottest Job in Startups.” They argue AI companies achieving massive scale are embracing complex implementation work to deeply integrate with customer systems of context.

Margin is being “traded” by a growing number of AI startups who are investing upfront in deep - often custom - AI integrations. Smaller near-term margins will translate over time into revenue that retains better and grows faster.

Forward Deployed Engineers co-ordinate the integration of APIs. But they also think about and understand business workflows, data relationships, and decision-making processes to give AI systems the context they need to be genuinely useful.

They turn latent data into meaningful context, producing much better results.

When your AI system is deeply integrated with specific context, workflows, and data relationships, switching to a competitor becomes far more difficult.

Ashu Garg and Jaya Gupta of Foundation Capital recently touched on the same trend in “The $4.6T Services-as-Software opportunity: Lessons from year one.”

In their words:

“When every company can ship the same primitives using the same models, what you build is no longer your moat. How you integrate, embed, and operate becomes the moat.”

This should be music to the ears of every partnerships professional.

When AI capabilities rapidly commoditize, implementation and integration become the lasting differentiator - exactly the complex, relationship-driven work that partnerships teams excel at.

Foundation Capital’s research revealed that in enterprise AI, “integration is not a post-sale activity. It is the product surface.” This is a massive shift from “traditional” SaaS partnerships, where integrations were often an afterthought or a nice-to-have.

How context engineering maps to context partners

The most successful AI implementations orchestrate multiple context partners to create rich, multi-dimensional understanding.

A customer service AI solution might combine customer history from Salesforce, product documentation from Notion, conversation context from Slack, and real-time system status from monitoring tools to create comprehensive support responses.

Context engineering includes a variety of context types (which I previously covered in An MCP Primer for Platform Partnerships Teams):

“Tools” - functions the AI can call (example: HubSpot’s “create_contact” or Linear’s “create_issue”)

“Resources” - data the AI can read (example: SQL databases, GitHub repository files)

“Prompts” - templates that help the AI interact effectively (example: “Generate a sales email using this contact's interaction history”)
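
To make the three context types concrete, here is a minimal Python sketch of how an agent might combine them. It does not use a real MCP SDK: the ContextRegistry class, the crm:// resource name, and the stand-in create_contact function are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ContextRegistry:
    """Hypothetical in-memory registry for the three context types."""
    tools: Dict[str, Callable[..., dict]] = field(default_factory=dict)
    resources: Dict[str, Callable[[], str]] = field(default_factory=dict)
    prompts: Dict[str, str] = field(default_factory=dict)


def create_contact(name: str, email: str) -> dict:
    # Stand-in for a CRM call; a real tool would call the partner's API.
    return {"status": "created", "name": name, "email": email}


def read_interaction_history() -> str:
    # Stand-in for a database or repository read.
    return "2 calls, 1 demo, last contact 3 days ago"


registry = ContextRegistry()
registry.tools["create_contact"] = create_contact
registry.resources["crm://contacts/ada/history"] = read_interaction_history
registry.prompts["sales_email"] = (
    "Generate a sales email for {name} using this interaction history:\n{history}"
)

# An agent combines the three: read a resource, fill a prompt, call a tool.
history = registry.resources["crm://contacts/ada/history"]()
prompt = registry.prompts["sales_email"].format(name="Ada", history=history)
result = registry.tools["create_contact"](name="Ada", email="ada@example.com")
```

The point of the sketch is the separation: tools act, resources inform, and prompts shape how the model uses both.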

So, with that in mind, how can partner teams align with and rally around the right types of partners to scale the impact of context engineering?

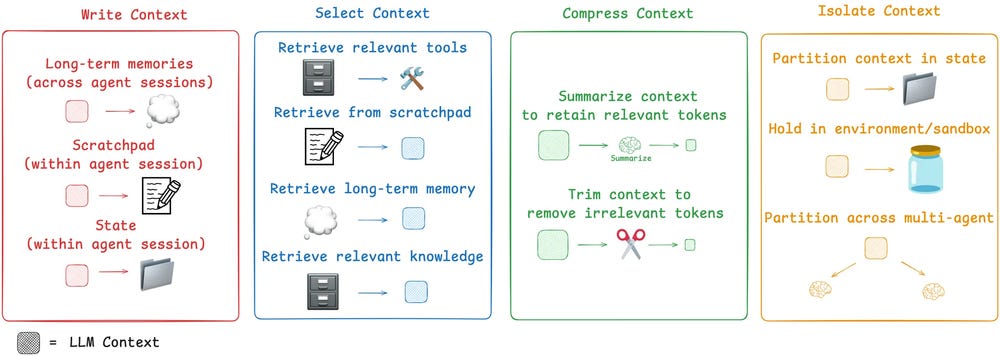

There are many potential ways to group “context” partners, but I like LangChain’s general categories of context engineering.

LangChain’s categories - Write, Select, Compress, Isolate - help us map partners into a simple architecture. Instead of building point-to-point connections between individual systems, partnerships teams can orchestrate comprehensive context ecosystems that enable AI systems to understand and act on complex business scenarios.

In LangChain’s grouping, context engineering operates across four distinct layers, each of which can be mapped to specialized partnership opportunities. Rather than viewing these as separate categories, partnerships teams should approach them as an integrated ecosystem where different types of partners work together.

Write context partners

Save context outside the context window to help an agent perform a task.

Write context partners provide persistent memory that extends beyond single interactions, including the ability to store intermediate thoughts, build institutional knowledge, and maintain conversation history across sessions.

Examples:

Zep - dedicated context engineering for AI agents

Mem0 - universal memory for AI agents

DuckDB - structured memory management
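
As a rough illustration of the "write" pattern, the sketch below stores notes outside the context window and recalls them in a later session. It uses Python's built-in sqlite3 module as a stand-in; the MemoryStore class and its method names are my own assumptions, not the API of any partner listed above.

```python
import sqlite3
from typing import List


class MemoryStore:
    """Hypothetical session memory kept outside the model's context window."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS notes ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, session TEXT, note TEXT)"
        )

    def write(self, session: str, note: str) -> None:
        self.db.execute(
            "INSERT INTO notes (session, note) VALUES (?, ?)", (session, note)
        )
        self.db.commit()

    def recall(self, session: str, limit: int = 5) -> List[str]:
        rows = self.db.execute(
            "SELECT note FROM notes WHERE session = ? ORDER BY id DESC LIMIT ?",
            (session, limit),
        ).fetchall()
        # Return oldest-first so notes read in the order they were written.
        return [row[0] for row in reversed(rows)]


store = MemoryStore()
store.write("acct-42", "Customer prefers email over phone.")
store.write("acct-42", "Renewal is due in Q3.")
store.write("acct-7", "Unrelated session.")
notes = store.recall("acct-42")
```

Passing a file path instead of ":memory:" would make the notes survive across processes, which is the property that matters for long-running agents.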

Select context partners

Pull context into the context window to help an agent perform a task.

Select context partners fetch and load relevant information into the context window, including Retrieval-Augmented Generation (RAG), dynamic tool selection, and just-in-time information delivery.

Examples:

Pinecone - fully managed vector search

Contextual.ai - enterprise grade RAG deployment

Glean - enterprise knowledge discovery
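
The "select" pattern can be sketched in a few lines of Python. This toy version ranks documents by word-count cosine similarity, which is a deliberate simplification: production systems typically use learned embeddings and a vector index. The select_context function and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def _vector(text: str) -> Counter:
    # Bag-of-words vector: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def select_context(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = _vector(query)
    ranked = sorted(documents, key=lambda d: _cosine(q, _vector(d)), reverse=True)
    return ranked[:k]


docs = [
    "Refund policy: refunds are issued within 14 days of purchase.",
    "Shipping times vary by region and carrier.",
    "Office holiday schedule for the support team.",
]
top = select_context("How do I get a refund for my purchase?", docs, k=1)
```

Only the top-ranked document would be loaded into the context window; the rest stay out of the model's way.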

Compress context partners

Make it easy to retain only the tokens required to perform a task.

Compress context partners optimize limited context window space through summarization, trimming, and linguistic compression for maximum information density.

Examples:

LangChain - compression retrievers and LLM-based filtering

Orq.ai - context optimization and LLM management

Portkey - request optimization and context management
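
Trimming is the simplest form of compression, and a short Python sketch shows the idea. It keeps the most recent messages that fit a token budget. The word-count token estimate is a crude assumption (real tokenizers differ), and trim_history is a hypothetical helper rather than a function from any of the tools above.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def trim_history(messages: List[str], budget: int) -> List[str]:
    """Keep the most recent messages whose combined estimate fits the budget."""
    kept: List[str] = []
    used = 0
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # Restore chronological order.
    return kept


history = [
    "user: my order arrived damaged",
    "assistant: sorry to hear that, can you share the order number",
    "user: it is 10432",
    "assistant: thanks, a replacement is on its way",
]
trimmed = trim_history(history, budget=12)
```

Summarization-based compression would replace the dropped messages with a short recap instead of discarding them, at the cost of an extra model call.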

Isolate context partners

Split context up to help an agent perform a task.

Isolate context partners separate different contexts to prevent negative interference, including multi-agent systems with specialized context windows and sandboxing.

Examples:

CrewAI - specialized agent co-ordination

Stack AI - no-code agent isolation

Beam - agentic automation with isolated workflows
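
To illustrate isolation, the Python sketch below gives each sub-agent its own private context and lets only a short summary flow back to the orchestrator. The SubAgent and Orchestrator classes are hypothetical and far simpler than a real multi-agent framework.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SubAgent:
    name: str
    context: List[str] = field(default_factory=list)

    def observe(self, item: str) -> None:
        self.context.append(item)

    def summary(self) -> str:
        # Only a short summary leaves the sub-agent; its full context stays private.
        return f"{self.name}: {len(self.context)} items reviewed"


class Orchestrator:
    def __init__(self) -> None:
        self.agents: Dict[str, SubAgent] = {}

    def route(self, agent_name: str, item: str) -> None:
        # Create the sub-agent on first use, then add the item to its context only.
        agent = self.agents.setdefault(agent_name, SubAgent(agent_name))
        agent.observe(item)

    def combined_summary(self) -> List[str]:
        return [agent.summary() for agent in self.agents.values()]


orch = Orchestrator()
orch.route("billing", "Invoice 881 is overdue")
orch.route("billing", "Card on file expired")
orch.route("support", "Ticket about login errors")
summaries = orch.combined_summary()
```

Because the billing items never enter the support agent's context, neither agent's reasoning is diluted by the other's data.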

These are just examples - the number of context partners in the market is growing. Many integrate or partner with each other, and are building out their own capabilities, to offer interoperable solutions across all four context types.

Many are building their own ecosystems of Systems Integrator (SI) and ISV partners.

Building context partnerships that scale

The growth in demand for context engineering creates immediate opportunities for partnerships teams. Here are some actionable next steps to get started:

Audit your current partner ecosystem through a context lens

Map your existing partners to LangChain’s four categories: Write, Select, Compress, and Isolate. This could reveal immediate gaps and partnership opportunities that align with your customers’ context engineering needs.

Develop context partnership criteria

Traditional partnership criteria focused on market size and technical compatibility - context partners require additional evaluation. Can partners handle enterprise data with appropriate trust and security controls? How well do their context formats integrate with your customers’ AI systems? Questions like these will help you identify partners who can truly scale the impact of context engineering.

Enable Systems Integrators and Global Systems Integrators (GSIs)

SIs and GSIs already deeply understand customer workflows and data relationships - exactly what context engineering requires. Train your SI/GSI partners on context engineering principles and create joint playbooks for common scenarios. Example: when an SI identifies a need for better memory management, they should immediately think of your Write context partners.

Create context partner ecosystems, not just integrations

The most valuable context implementations orchestrate multiple partners working together. Create and share reference architectures that show how different context partners can complement each other, and facilitate introductions between your Write, Select, Compress, and Isolate partners.

Context partnerships also require strong technical foundations - high-quality APIs and seamless integrations become even more critical when AI systems need to orchestrate multiple data sources and tools in real-time. I’ll explore the API and integration requirements for successful context partnerships in a future post.

The future of context partnerships

Unlike “traditional” integration partnerships, which can often focus on once-and-done integrations to unlock go-to-market opportunities, context partnerships require deep ongoing collaboration, flexibility, and the ability to react quickly to market pressure.

This creates a fundamentally different partnership dynamic.

Partners need to understand each other’s roadmaps and releases, share insights about customer needs, and collaborate more closely than ever before. They need to develop new skills around data governance, AI trust and safety, and context engineering.

Those that do can capture a meaningful slice of a multi-trillion-dollar transformation.
